Most of my experience with AI had been retrofitting: incorporating AI into existing software and codebases.

Yeah, what a mess. I imagine it’s a lot like electrifying existing factories must have been. The habits, workflows, assumptions, etc. were just too different.

Contrast that with starting a new project with AI, where everything is AI-native and the code is AI-maintainable from the start. It’s such a different experience.

90% planning / 10% execution

What you’ll realize is that you’ll spend a TON of time upfront on design and planning since AI can execute (write code) so quickly. And the special sauce comes down to the rules, which drive everything else.

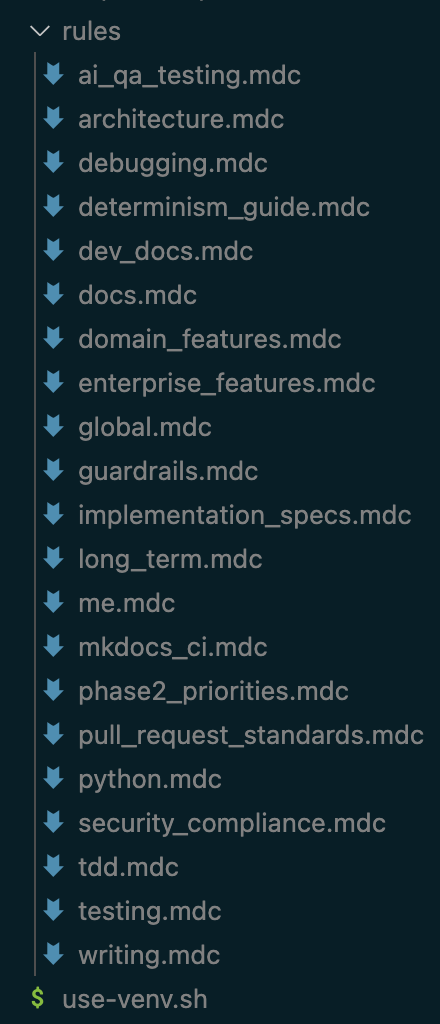

In Cursor, for instance, these are .mdc files that dictate how the AI should operate.

For example, here are mine for a small Python project. Some are always used, some are pulled in intelligently, and some are only added manually. Messy, but functional.

These tell AI exactly how to operate in what situations and why.
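To make those three modes concrete, here’s a rough Python sketch of how a tool might decide which rules land in the AI’s context. It’s a toy model under my own assumptions, not how Cursor actually implements it: the Rule class, the mode names and select_rules are hypothetical, and matching file patterns against the open files stands in for “pulled in intelligently”.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch


@dataclass
class Rule:
    name: str
    mode: str  # hypothetical labels: "always", "auto" or "manual"
    globs: list[str] = field(default_factory=list)  # used only by "auto" rules
    body: str = ""  # the instructions the AI would actually read


def select_rules(rules, open_files, requested=()):
    """Return the rules that would be added to the AI's context."""
    selected = []
    for rule in rules:
        if rule.mode == "always":
            # Always-on rules: project-wide context and conventions.
            selected.append(rule)
        elif rule.mode == "auto" and any(
            fnmatch(path, pattern) for path in open_files for pattern in rule.globs
        ):
            # Stand-in for "pulled in intelligently": attach when a file matches.
            selected.append(rule)
        elif rule.mode == "manual" and rule.name in requested:
            # Manual rules only apply when someone explicitly asks for them.
            selected.append(rule)
    return selected


rules = [
    Rule("project-overview", "always", body="Explain why before how."),
    Rule("python-style", "auto", globs=["*.py"], body="Use type hints."),
    Rule("tests", "auto", globs=["tests/*.py"], body="Use pytest fixtures."),
    Rule("release-checklist", "manual", body="Bump version, tag, changelog."),
]

names = [r.name for r in select_rules(rules, ["src/app.py"])]
print(names)  # ['project-overview', 'python-style']
```

Editing a test file would also pull in the tests rule, and passing requested=["release-checklist"] adds the manual one. The point isn’t the mechanics; it’s that each rule carries both the “what” and the “when”.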

It turns out that doing this is just a best practice in general. Every company should have these anyway. And once the company is big enough, there will be subsets of rules by team, project, codebase, etc.

Pre-AI messiness = low standards

And you know what I realized? Part of the complexity of embracing AI is that most companies DON’T have this. There is a lack of alignment, scattered codebases, complex undocumented dependencies, etc.

That’s why it’s so much easier to build something new: you start with a clean slate where everything is aligned.

In effect, part of making something ‘AI-maintainable’ is raising the standard above what most organizations are used to. Low standards tend to work because humans are great at managing ambiguity. But with AI, we have to accept that limitation in order to unlock the leverage and opportunities it provides.

What about the AI promise?

Therefore, all of these fabled AI productivity gains are gated by the ability to navigate this transition, which is complex:

- Training employees to learn new behaviors

- Accepting that these behaviors will continue to evolve as AI does

- Aligning best practices and codifying standards

- Making existing codebases ‘AI-maintainable’ (or is it faster to rewrite them? IDK)

Policy as code

But this goes beyond just codifying dev best practices in rules. Long term, this means codifying important company context so employees and AI stay aligned: everything is documented, giving full context that any AI can plug into and every employee understands.

Again, this should happen anyway, but humans are imperfect and organizations are complex, so it rarely happens. In fact, I’d wager that misaligned strategy and incentives create the most friction, particularly in large organizations.

For example, one historic source of power is the tribal knowledge held by graybeards. That sort of behavior becomes untenable with AI: context that isn’t written down simply doesn’t exist for the AI, and the gap will quickly get papered over with something universally understood.

A new opportunity

You would think something like MS Office or Notion would be the emerging source of power to manage this context. But it’s actually the opposite: those products encourage MORE documents, which need to be managed, vary in quality, are largely duplicative, and are of minimal use in the grand scheme of things.

Source of truth

What really matters is a handful of ‘source of truth’ documents, the 1% that guides 99% of the work at the company.

Sure, employees may create new documents each day (memos, status updates, proposals, etc.), but this system should automatically sanity-check everything to help folks stay aligned, almost like a spell check with suggestions. And if there’s enough misalignment (i.e. too many ‘spelling’ suggestions), it’s a signal to leaders that either their org is out of alignment (top down) or the ‘source of truth’ is outdated (bottom up) and needs to be updated. This applies to code and strategy alike.

If you’ve worked in a large org for any length of time, you know this lack of alignment is one of the most pervasive problems. Solving (or at least improving) this alone would significantly boost productivity and reduce churn within organizations.
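Here’s one way that ‘enough misalignment’ test could work, as a minimal Python sketch. Everything in it is an assumption for illustration: the checker that produces the flags isn’t shown, misalignment_signals and the team and section names are hypothetical, and the rules of thumb (a per-team threshold for the top-down signal, ‘flagged by most teams’ for the bottom-up one) are mine.

```python
from collections import Counter, defaultdict


def misalignment_signals(flags, docs_per_team, threshold=0.5):
    """Turn 'spell check' flags into two signals for leaders.

    flags: list of (team, source_of_truth_section) pairs, one per suggestion
           raised by a hypothetical checker on a new document.
    docs_per_team: number of documents each team wrote in the period.
    threshold: flags per document above which a team counts as out of line.
    """
    # Top down: teams whose documents keep drifting from the source of truth.
    flags_per_team = Counter(team for team, _ in flags)
    teams_out_of_line = sorted(
        team
        for team, n_docs in docs_per_team.items()
        if n_docs and flags_per_team[team] / n_docs > threshold
    )

    # Bottom up: a section that most teams disagree with may simply be outdated.
    teams_per_section = defaultdict(set)
    for team, section in flags:
        teams_per_section[section].add(team)
    stale_sections = sorted(
        section
        for section, teams in teams_per_section.items()
        if len(teams) > len(docs_per_team) / 2
    )
    return teams_out_of_line, stale_sections


flags = [
    ("payments", "pricing"), ("payments", "pricing"), ("payments", "roadmap"),
    ("search", "pricing"), ("growth", "pricing"),
]
docs_per_team = {"payments": 4, "search": 10, "growth": 5}
print(misalignment_signals(flags, docs_per_team))  # (['payments'], ['pricing'])
```

With these numbers, payments averages 0.75 flags per document and gets called out, while the pricing section drew flags from all three teams, which hints that the source of truth itself may need updating.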

The new consultants: Making companies AI native

This seems like a new consulting opportunity. Imagine helping companies:

- Draft their coding rules by codifying their existing best practices + AI-native ones

- Build their first new features with those rules, working alongside each team

- Migrate existing code bit by bit once teams are experienced

The migration part sounds like an automation opportunity, but ‘migrating’ isn’t just about code; it’s about training the teams who own that code to work in the new way.

And just like with any technological change, those who can’t adapt should take on different roles more suited to their abilities.

The end result of the process is a clear framework and coaches/teams trained to implement it across the company.

Code is the easiest part because it’s verifiable. But you can imagine the same process happening with the rest of the company, migrating everything to the 1% source of truth that drives the other 99% of the work.

AI makes better software & companies

It turns out the code doesn’t matter. It seemed like it did when code was precious because it was all handwritten (which is why GitHub became so popular). Can you believe we used to write all code by hand? Wow.

But it turns out the most important thing is the people and processes. We took all of that for granted and did it inefficiently because we could. But now that we all have these 1,000 HP coding engines called AI, there are no more ICs.

We’re all managing agents, so a little misalignment is a lot more expensive. We need better rules to guide ourselves and the AI.

In fact, I’d wager that 50% of the ‘GDP unlock’ that comes from AI will simply come from better alignment, because the rules will align people and AI. And it’ll be hard to believe how we ever tolerated so much misalignment in the past.