TL;DR

Time-based estimates don’t make sense when your pair programmer is an AI.

So, I switched to ETM (estimated tokens to merge). It’s the agent’s token budget to design, implement, and polish a feature until it’s merge-ready.

Doing that helped me flip to a more AI-native, agent-first mindset, which is helping me embrace a new way of working.

Why estimated tokens to merge (ETM)?

By default, Cursor always gave me estimates in time (hours, weeks, months), which doesn’t really make sense when an AI agent is doing the coding.

So I switched to ETM (est. tokens to merge) and it’s much more useful. This is the agent’s token budget to implement, test, and polish this change until it could be merged.

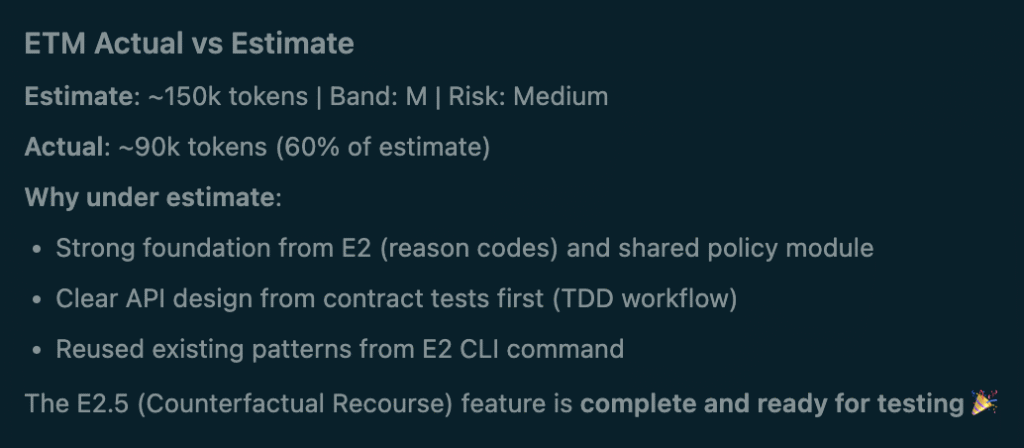

Example from a post-implementation summary today:

Token calibration

The more I use it, the better a feel I get for it. It’ll also be interesting to see how it changes over time, since I’ll need to recalibrate the bands as models evolve.

Here are the current token calibration bands I’m using:

- XS: 20–50k

- S: 50–120k

- M: 120–300k

- L: 300–700k

- XL: 700k+
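As a rough sketch, the bands above can be written as a small lookup that maps a token count to a size label. The names `Band`, `BANDS`, and `etm_band` are hypothetical and not part of Cursor or any library. Since adjacent bands share a boundary value (50k sits in both XS and S), this sketch assumes each upper bound is exclusive, and it assumes anything under 20k still counts as XS.

```python
from typing import NamedTuple, Optional


class Band(NamedTuple):
    name: str
    low: int              # lower bound in tokens, as listed in the post
    high: Optional[int]   # exclusive upper bound (assumption); None = open-ended


# Calibration bands copied from the list above.
BANDS = [
    Band("XS", 20_000, 50_000),
    Band("S", 50_000, 120_000),
    Band("M", 120_000, 300_000),
    Band("L", 300_000, 700_000),
    Band("XL", 700_000, None),
]


def etm_band(tokens: int) -> str:
    """Return the size label for an estimated-tokens-to-merge value."""
    if tokens < 0:
        raise ValueError("token count cannot be negative")
    for band in BANDS:
        # Assumption: values below 20k fall into XS, the smallest band.
        if band.high is None or tokens < band.high:
            return band.name
    raise AssertionError("unreachable: the last band is open-ended")


if __name__ == "__main__":
    for estimate in (35_000, 50_000, 250_000, 900_000):
        print(f"{estimate:>9,} tokens -> {etm_band(estimate)}")
```

Keeping the bands in one list means recalibrating for a new model is a one-line change per band.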

The use of reasoning, especially now with Sonnet 4.5 🧠 (thinking), pushes the token calibration bands higher, especially on big implementations.

Not quite vibe coding?

This is my first “all in” agent coding project. It’s not quite vibe coding since I’m being very deliberate and explicit in design, planning, and setting rules, then iterating on them and reviewing everything. For example, I’m using:

- Explicit architecture (extensibility rules, plugin patterns, boundaries)

- TDD workflow with contract tests and determinism requirements

- Comprehensive rule system (10+ `.mdc` files defining everything)

- Quality gates (`mypy --strict`, reproducibility, byte-identical outputs)

- Documentation requirements (changelog, user guides, cleanup checklists)

- Review & learn approach since I’m newer to Python and AI interpretability/explainability, which I’ve defined in a “`me.mdc`” that always asks “why” to build understanding
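To make the “byte-identical outputs” gate concrete, here is a minimal sketch of what such a determinism check could look like in Python. It is an illustration under assumptions, not the project’s actual test suite: `assert_byte_identical` and `render_report` are hypothetical names, and `render_report` is a stand-in for whatever function produces the artifact under test.

```python
import hashlib
import json
from typing import Callable


def assert_byte_identical(produce: Callable[[], bytes], runs: int = 3) -> str:
    """Call `produce` several times and fail unless every output hashes the same."""
    digests = {hashlib.sha256(produce()).hexdigest() for _ in range(runs)}
    if len(digests) != 1:
        raise AssertionError(f"non-deterministic output: {len(digests)} distinct digests")
    return digests.pop()


def render_report() -> bytes:
    # Hypothetical stand-in for the real artifact generator.
    payload = {"model": "demo", "scores": [0.1, 0.2]}
    # Sorted keys and fixed separators keep the serialized bytes stable.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    print("stable digest:", assert_byte_identical(render_report))
```

A check like this catches the usual sources of drift, such as timestamps, unordered collections, or unseeded randomness, before they reach a contract test.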

So even though it’s not traditional vibe coding, it’s forcing me to trust the agent more since so much is intentionally new.

Knowing that this is the worst AI will ever be, I just don’t see how this isn’t the future.

Other observations

Below are some early observations I might write more on later.

What the work is

Another interesting observation: instead of coding being my work, my work is reviewing, challenging, and researching the rules, design, and architecture, which forces more improvement and iteration on all of them.

I’m traditionally a PM and used to working with senior devs, EMs and architects, so the process feels surprisingly natural to me.

It’s not cheap

I blew through my Cursor Ultra quota very quickly, so this isn’t cheap as far as software tools go, but it’s very cheap compared to getting this level of effort/support from a human.

New hands

Evolving with the technology has always been a thing humans had to do, especially these days as the rate of change increases.

But this experience shows me that this doesn’t really replace developers. It just gives developers new, more capable “hands” to write code instead of writing it manually.

It also gives non-coders hands that know how to code, but it doesn’t work miracles. You still have to bring something to the table in defining the what and how of building.

Long-term maybe the AI will get even better at the how, and the only remaining differentiator may be the ‘what’ (taste) and the capital to pay for high quality agents.

Just another level of abstraction

In a way, AI agents are just another layer of abstraction for developers, and moving to higher levels of abstraction is what technology has always done. That means more leverage and more productivity, though not without tradeoffs, as with any technology.

Optimizing for the long-term

I added a rule to always optimize for the best long-term solution. The agent often proposes quick fixes, but when iteration is cheap, there’s no reason to choose hacks over durable design.

Package first

A solid product development process feels like “package first” development. As a PM of user-facing products, this felt counter-intuitive. I would always start with mocks, which drove everything else.

But given the way agents work, doing things “package-first” to lock down the engine and logic first works much better at this stage of AI capabilities. It adds helpful constraints and saves the UI, which requires a different kind of ‘taste’, until later.

Still further to go

As good as the AI (Cursor + Sonnet 4.5) is, there’s still a lot further to go. I catch a lot of things, but they all feel very solvable and the progress is extremely tangible (it’s only a matter of time).

Still learning

I’m still in the middle of it, so we’ll see how it goes and how my opinions evolve. But as of now, wow, I am extremely impressed.

Switching to “estimated tokens to merge (ETM)” was a great way to flip the switch in my brain to think about building things in a more AI-native, agent-first way.

For me ETM isn’t just a metric, but a mindset shift for building in the AI era.